Why are GPUs necessary for deep learning?

This article is about the GPU and its importance in the field of deep learning. Many state-of-the-art deep learning networks would not be possible without GPUs. To understand the GPU, we first have to start with the basics: the CPU.

CPU

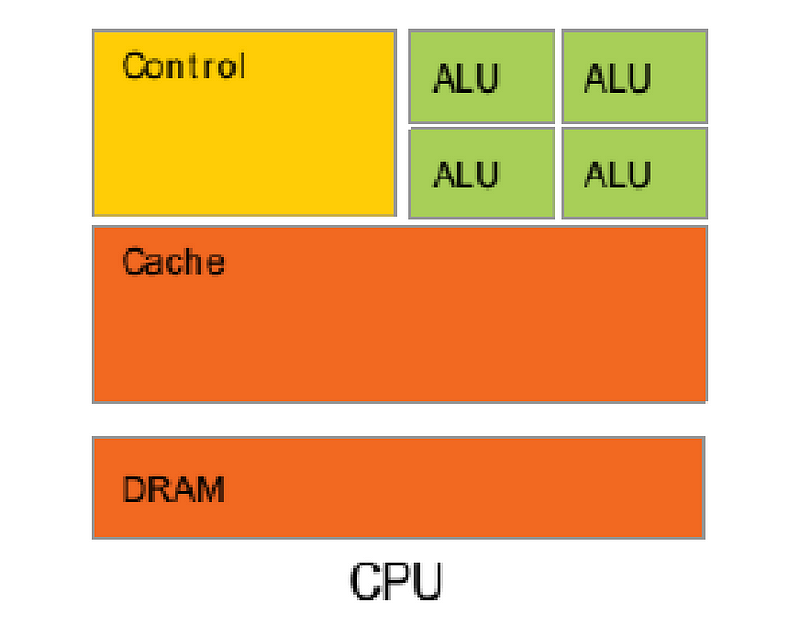

The Central Processing Unit (CPU) is the heart of a computer. It controls all the operations you perform: it interprets a program's instructions and produces the output you interact with when you use a computer. It consists of multiple Arithmetic Logic Units (ALUs), a single control unit and a single cache memory, and it works alongside the computer's main memory. The CPU is a microprocessor designed for low latency, that is, for finishing each individual task as quickly as possible.

It has a few powerful cores, which let it run multiple processes at a time. Processor cores are individual processing units within the computer's CPU, and most computers now have multiple cores. This is what lets you run several tasks at once, such as editing a document, watching a video and running a program. In the early days, every processor had just one core, which could focus on only one task at a time. Today's CPUs have from two to 18 or more cores, each of which can work on a different task.

Think of the CPU as a Ferrari. It has low latency: it can fetch a small piece of data from main memory very quickly. However, its memory bandwidth is comparatively low, so although each fetch is fast, it cannot move a large amount of data at once. To work through a large dataset it has to make frequent trips to main memory, which is time-consuming. In the same way, a Ferrari is fast but carries very little cargo, so it has to go back and forth many times to complete a single big delivery. A CPU core has multiple ALUs but a single control unit driving them, so each core is best suited to sequential operations. Its parallel processing is also limited, because a CPU has only tens of cores at most.

A processor's clock speed determines how quickly the CPU can retrieve and interpret instructions, so a higher clock speed helps your computer complete more tasks by getting each one done faster. Clock speed is measured in gigahertz (GHz), that is, billions of cycles per second. If a CPU has one or two cores with a high clock speed, it can load and interact with a single application quickly. Many cores with a lower clock speed can load and interact with multiple applications at once, but each one runs more slowly.

GPU

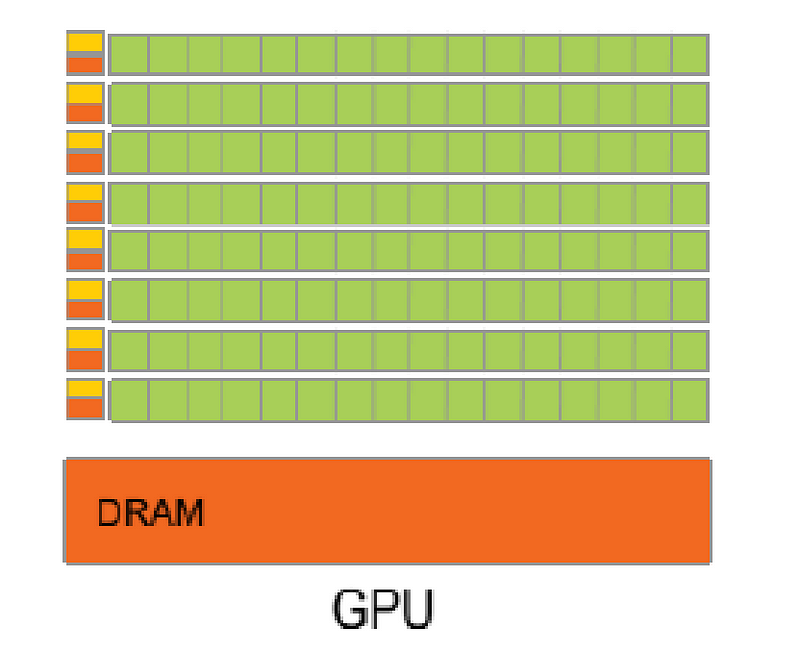

The Graphics Processing Unit (GPU) was built for the kinds of workloads where the CPU lags behind. It consists of thousands of ALUs, many control units to drive those ALUs, a small cache for each control unit, and a main memory. It is designed for high bandwidth rather than low latency. The GPU is like a delivery truck: it cannot go as fast as the Ferrari, but it carries far more at once. Each instruction completes more slowly, but many instructions are processed at the same time, which increases the overall throughput.

It has high latency, i.e., loading data takes much longer on a GPU than on a CPU, so frequent reads from memory are expensive. To work around this latency, GPUs use thread parallelism: while one thread waits for its data to arrive, the processor switches to another thread whose data is already loaded, so it does not sit idle waiting for memory.
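
To make the latency-hiding idea concrete, here is a minimal Python sketch of a deliberately simplified model, not a description of how any particular GPU schedules its threads. It assumes each thread computes for a fixed number of cycles, then stalls while it waits for memory, and that the core can switch to any ready thread at no cost. The function names and cycle counts are hypothetical.

```python
def utilization(num_threads: int, compute_cycles: int, wait_cycles: int) -> float:
    """Fraction of time a core spends computing in an idealized model.

    Each thread repeatedly computes for `compute_cycles`, then stalls for
    `wait_cycles` while its next data loads. Switching between threads is
    assumed to be free, so one thread's stall can be filled with the
    computation of other threads.
    """
    period = compute_cycles + wait_cycles  # one compute + wait cycle of a thread
    busy = num_threads * compute_cycles    # work available per period
    return min(1.0, busy / period)


def threads_needed(compute_cycles: int, wait_cycles: int) -> int:
    """Smallest number of threads that keeps the core fully busy in this model."""
    period = compute_cycles + wait_cycles
    return -(-period // compute_cycles)  # ceiling division


# Hypothetical numbers: 10 cycles of math, then 90 cycles waiting on memory.
for n in (1, 5, 10, 20):
    print(f"{n:>2} threads -> {utilization(n, 10, 90):.0%} busy")
print("threads needed:", threads_needed(10, 90))
```

With a single thread, the core in this model is idle 90% of the time; with ten or more threads in flight, some thread always has its data ready. This is why GPUs are designed to keep many threads in flight at once.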

A GPU has thousands of weaker cores for computation. If you have a large amount of data to transport from location 1 to location 2, the GPU behaves like the delivery truck: it loads a lot of data at once, and although its weaker cores process each piece slowly, it needs only a few trips to main memory for a given task instead of many frequent ones. This avoids the time-consuming back-and-forth to memory that slows the CPU down, and it is one of the main characteristics of the GPU.
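
The Ferrari and delivery-truck analogy can be put into numbers. The sketch below assumes made-up capacities and trip times (a Ferrari carrying 2 items per 1-hour trip, a truck carrying 100 items per 3-hour trip); only the shape of the result matters, not the specific values.

```python
import math


def delivery_time(items: int, capacity: int, trip_hours: float) -> float:
    """Total hours to move `items` when each trip carries at most
    `capacity` items and takes `trip_hours`."""
    trips = math.ceil(items / capacity)
    return trips * trip_hours


# Hypothetical vehicles: the numbers only illustrate the analogy.
FERRARI = {"capacity": 2, "trip_hours": 1.0}  # low latency, low throughput
TRUCK = {"capacity": 100, "trip_hours": 3.0}  # high latency, high throughput

for items in (1, 1000):
    f = delivery_time(items, **FERRARI)
    t = delivery_time(items, **TRUCK)
    print(f"{items:>5} items: Ferrari {f:6.1f} h, truck {t:6.1f} h")
```

For a single item the Ferrari wins (1 hour versus 3), which is what low latency buys. For 1,000 items the truck wins (30 hours versus 500), because it moves about 33 items per hour against the Ferrari's 2, which is what high throughput buys.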

The clock speed of a GPU is much lower than that of a CPU, so it is poorly suited to processing sequential instructions. A GPU also has many small, fast registers for each block of cores, and its registers are about 30 times larger in total than a CPU's, so a lot of data can be kept right next to the cores.
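
The trade-off between clock speed and core count can be estimated with back-of-the-envelope arithmetic. This sketch assumes two hypothetical processors (an 8-core CPU at 4.0 GHz and a 2,048-core GPU at 1.5 GHz) and the gross simplification that every core completes one operation per clock cycle; real hardware differs in many ways.

```python
from dataclasses import dataclass


@dataclass
class Processor:
    name: str
    cores: int
    clock_ghz: float  # billions of cycles per second

    def run_seconds(self, ops: float, parallel: bool) -> float:
        """Idealized runtime assuming one operation per core per cycle.

        A sequential task (each step depends on the previous one) can use
        only one core; a parallel task can spread over all cores.
        """
        usable_cores = self.cores if parallel else 1
        return ops / (usable_cores * self.clock_ghz * 1e9)


# Hypothetical specs, chosen only to illustrate the trade-off.
cpu = Processor("CPU", cores=8, clock_ghz=4.0)
gpu = Processor("GPU", cores=2048, clock_ghz=1.5)

sequential_ops = 1e9  # one billion dependent steps
parallel_ops = 1e12   # one trillion independent operations

for p in (cpu, gpu):
    print(f"{p.name}: sequential {p.run_seconds(sequential_ops, False):.2f} s, "
          f"parallel {p.run_seconds(parallel_ops, True):.2f} s")
```

Under these assumptions the CPU's faster clock wins on the sequential task (0.25 s versus about 0.67 s), while the GPU's core count wins by roughly a factor of 100 on the parallel one.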

Why is the GPU considered superior to the CPU?

The GPU was originally invented to render graphics seamlessly on a computer. Rendering graphics requires the processor to perform complex matrix multiplications and convolutions. When an object moves on the screen, its coordinates must be translated (moved) from one point to another; for a 3-dimensional object, this happens in 3D space along the x, y and z axes. To change the size of an object on the screen, you apply a scaling operation to its coordinates. These operations have to happen rapidly and continuously for objects to appear to move, and a CPU cannot process this much information in parallel.
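
Translation and scaling of 3D coordinates are usually written as 4×4 matrices acting on homogeneous coordinates (x, y, z, 1). The pure-Python sketch below assumes that convention; the helper names are illustrative. Each vertex is transformed independently of the others, which is exactly the kind of work a GPU can spread across thousands of cores.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]
Point = Tuple[float, float, float]


def translation(tx: float, ty: float, tz: float) -> Matrix:
    """4x4 matrix that moves a point by (tx, ty, tz)."""
    return [[1, 0, 0, tx],
            [0, 1, 0, ty],
            [0, 0, 1, tz],
            [0, 0, 0, 1]]


def scaling(sx: float, sy: float, sz: float) -> Matrix:
    """4x4 matrix that stretches a point by (sx, sy, sz) about the origin."""
    return [[sx, 0, 0, 0],
            [0, sy, 0, 0],
            [0, 0, sz, 0],
            [0, 0, 0, 1]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def transform_points(m: Matrix, points: Sequence[Point]) -> List[Point]:
    """Apply m to each (x, y, z) point using homogeneous coordinates."""
    result = []
    for x, y, z in points:
        v = (x, y, z, 1)  # w = 1 lets the translation column take effect
        result.append(tuple(sum(m[i][k] * v[k] for k in range(4))
                            for i in range(3)))
    return result


corners = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 1)]
# Scale by 2 first, then move 5 units along x: the combined matrix is T x S.
combined = matmul(translation(5, 0, 0), scaling(2, 2, 2))
print(transform_points(combined, corners))
# → [(5, 0, 0), (7, 0, 0), (5, 2, 0), (7, 2, 2)]
```

Combining the transforms into one matrix first means each vertex needs only a single matrix-vector product, no matter how many moves and resizes are chained together.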

If you have ever played a high-end game on a computer without a GPU, you might have experienced glitches in the graphics, because the CPU cannot perform so many of these operations quickly enough. A GPU can do them in parallel and very fast. A large matrix multiplication can be split into multiplications of smaller sub-matrices, and the results can then be combined. Since a CPU has a limited number of cores, it cannot rapidly compute a large number of these smaller products. A GPU has thousands of cores that process the matrix operations simultaneously through thread parallelism.
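
Splitting a large matrix product into smaller ones can be sketched with block matrix multiplication: each output block C[I][J] is the sum over K of A[I][K] × B[K][J], and every output block can be computed independently. This pure-Python version assumes square matrices whose size is divisible by the block size. It submits each output block as a separate task to show that independence; because of Python's global interpreter lock, these threads do not actually speed up the arithmetic the way GPU cores would.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain triple-loop product, used for each small block."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def add(a: Matrix, b: Matrix) -> Matrix:
    """Element-wise sum of two equally sized matrices."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def block(m: Matrix, bi: int, bj: int, size: int) -> Matrix:
    """The (bi, bj) sub-block of m with side length `size`."""
    return [row[bj * size:(bj + 1) * size]
            for row in m[bi * size:(bi + 1) * size]]


def blocked_matmul(a: Matrix, b: Matrix, size: int) -> Matrix:
    """C = A x B computed block by block. Assumes square n x n inputs
    with n divisible by `size`."""
    n = len(a)
    nb = n // size  # number of blocks along each side

    def output_block(bi: int, bj: int):
        # C[bi][bj] = sum over bk of A[bi][bk] x B[bk][bj]
        acc = [[0] * size for _ in range(size)]
        for bk in range(nb):
            acc = add(acc, matmul(block(a, bi, bk, size), block(b, bk, bj, size)))
        return bi, bj, acc

    c = [[0] * n for _ in range(n)]
    with ThreadPoolExecutor() as pool:
        # Every output block is an independent task.
        futures = [pool.submit(output_block, bi, bj)
                   for bi in range(nb) for bj in range(nb)]
        for f in futures:
            bi, bj, acc = f.result()
            for r in range(size):
                c[bi * size + r][bj * size:(bj + 1) * size] = acc[r]
    return c


A = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
B = [[1, 0, 2, 0], [0, 1, 0, 2], [3, 0, 1, 0], [0, 3, 0, 1]]
print(blocked_matmul(A, B, 2) == matmul(A, B))
```

On a GPU, each of these independent block products would be handed to its own group of threads rather than run one after another.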

The CPU feeds data to the GPU: it reads the data from RAM or the hard disk, copies it to the GPU, and writes the results back when the GPU is done. This style of computing is called heterogeneous or hybrid computing. The GPU can dedicate more of its transistors to computational horsepower, while the CPU concentrates on other tasks using its own transistors. On the GPU, threads and context switching are managed by hardware. Parallelism can be increased further by adding more processes.

Strengths of GPU

- High bandwidth

- Hiding memory access latency through thread parallelism

- Registers that are easily programmable

Weaknesses of GPU

- The CPU is required to transfer data to the GPU card.

- It is poorly suited to sequential operations (e.g., recording mouse clicks and keystrokes) because of its high latency.

- A GPU card has far less memory than a CPU system: 24 GB on a high-end card, compared with 1 TB or more of RAM for a CPU.

GPUs in Deep Learning

In deep learning, a complex neural architecture can have hundreds of layers and thousands of neurons in each layer. Training a large neural network can take weeks or even months to produce a result. You will rarely get the right output on the first attempt; instead, you run many experiments, tweaking the architecture each time. Imagine training a network, waiting a week, tweaking the architecture, and then waiting another week to see the result. It is a tedious process.

When training convolutional neural networks for image classification, numerous convolution and pooling operations are involved in identifying the edges and other important features of an image. Numerous matrix operations take place at each layer during forward and backward propagation, especially since image classification usually relies on large datasets such as ImageNet, on which networks like AlexNet and GoogLeNet were trained. This kind of computation is similar to the way a GPU processes graphical instructions to render pixels on the screen. So researchers tried using GPUs for deep learning, and they passed with flying colours.
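
To show what one of these convolution operations computes, here is a minimal pure-Python sketch of a "valid" 2D convolution with stride 1 and no padding. Like most deep learning libraries, it does not flip the kernel (strictly speaking, this is cross-correlation). The 5×5 image and the Sobel-style vertical-edge kernel are made-up inputs for illustration.

```python
from typing import List

Grid = List[List[float]]


def conv2d(image: Grid, kernel: Grid) -> Grid:
    """'Valid' 2D convolution as deep learning libraries define it:
    the kernel is not flipped, stride is 1, and there is no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    # Each output value is a weighted sum over one kh x kw window.
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


# 5x5 image: dark on the left (0), bright on the right (9).
image = [[0, 0, 9, 9, 9] for _ in range(5)]
# Sobel-style kernel that responds to vertical edges.
kernel = [[-1, 0, 1],
          [-2, 0, 2],
          [-1, 0, 1]]

print(conv2d(image, kernel))
# → [[36, 36, 0], [36, 36, 0], [36, 36, 0]]
```

The output is large where the window straddles the boundary between the dark and bright regions and zero where the window sees only bright pixels. Every output value is computed independently of the others, so a GPU can compute them all in parallel.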

GPUs accelerate training not only for CNNs but also for other classification and sequential deep learning models. For example, if you have a 100 MB matrix, you can split it into smaller tiles that fit in the cache memory and registers, and then multiply those tiles at high speed. Because the tiles are reused from fast on-chip memory, the GPU does not need to go back to main memory as often as a CPU would.
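
The benefit of tiling can be estimated with a little arithmetic. This sketch assumes that "100 MB" means 100 × 2^20 bytes of 32-bit floats arranged as a square matrix, a product C = A × B of two such matrices, and 32×32 tiles; these are illustrative choices, not figures from the article. It counts element loads from main memory with and without tile reuse.

```python
import math

BYTES_PER_FLOAT32 = 4


def square_side(total_bytes: int) -> int:
    """Side of the largest square float32 matrix that fits in total_bytes."""
    return math.isqrt(total_bytes // BYTES_PER_FLOAT32)


def naive_loads(n: int) -> int:
    """Main-memory loads for an n x n product with no reuse: each of the
    n * n outputs reads a full row of A and a full column of B."""
    return 2 * n ** 3


def tiled_loads(n: int, tile: int) -> int:
    """Loads when A and B are read in tile x tile blocks that stay in fast
    memory while being reused. Each of the (n / tile) ** 3 block products
    loads one tile of A and one tile of B. Assumes n is divisible by tile."""
    blocks = n // tile
    return blocks ** 3 * 2 * tile * tile


n = square_side(100 * 2 ** 20)  # 100 MiB of float32
tile = 32
print("matrix side:", n)
print("bytes per tile:", tile * tile * BYTES_PER_FLOAT32)
print("naive loads:", naive_loads(n))
print("tiled loads:", tiled_loads(n, tile))
print("reduction:", naive_loads(n) // tiled_loads(n, tile))
```

Under these assumptions the matrix is 5,120 × 5,120, each 32 × 32 tile occupies only 4 KB, and reusing tiles cuts main-memory traffic by a factor equal to the tile width, 32 here.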

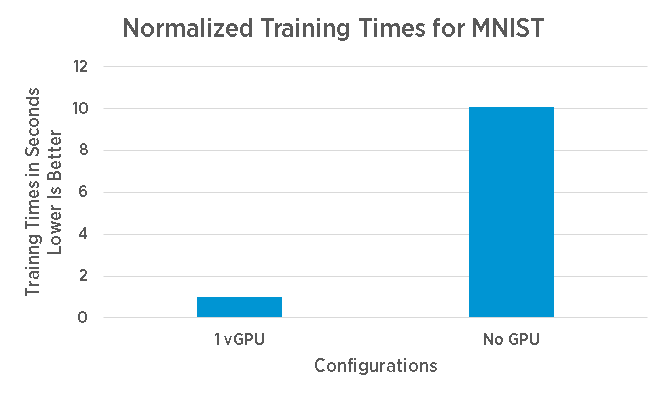

Stat: Training time on the MNIST handwritten digit recognition dataset with and without a GPU

What Next?

If you understand what a GPU is and why it is useful in deep learning, you might be wondering how to make your programs run on the GPU instead of the CPU. The answer is that you need to use the libraries provided by the hardware companies that make GPUs. Nvidia, the technology company credited with introducing the GPU for graphics processing, created CUDA, a parallel computing platform whose programming language extends C++, along with libraries that let programs run directly on the GPU instead of the CPU. The sequential operations run on the CPU, the parallel computations are offloaded to the GPU, and the data they need is transferred from the CPU to the GPU. Other companies, such as Intel, compete with Nvidia, so you can choose a GPU that matches your required specifications.
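
The article describes writing CUDA C++ directly. In day-to-day deep learning work, a common alternative is a framework built on top of CUDA. The sketch below assumes PyTorch is installed; it follows the workflow described above (create data on the CPU, copy it to the GPU, compute there, copy the result back) and falls back to the CPU when no CUDA-capable GPU is available.

```python
import torch

# Use the GPU when PyTorch can see a CUDA device; otherwise use the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Create the data in host (CPU) memory.
a = torch.randn(1024, 1024)
b = torch.randn(1024, 1024)

# Copy it to the device: on a GPU, this is the CPU-to-GPU transfer.
a_dev = a.to(device)
b_dev = b.to(device)

# The matrix multiplication runs on whichever device holds the tensors.
c_dev = a_dev @ b_dev

# Copy the result back to host memory for further sequential processing.
c = c_dev.cpu()
print(device, c.shape)
```

Because these copies between host and device are relatively slow, it is usually best to keep data on the GPU across many operations rather than moving it back and forth.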

