Why should you add bias to a neural network?

Hi everyone! This article explains why we add a “bias” term to a neural network.

A neuron is a single node in a layer. A layer contains some number of neurons, and a neural network consists of one or more layers.

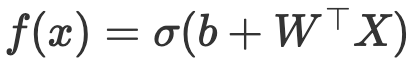

A single neuron’s job is to multiply each input by its weight, sum the results, add the bias, and apply an activation function to that total.

The bias b is a single number per neuron. Have you ever wondered why you have to add it to the network?
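
The neuron's computation can be sketched in a few lines of Python. This is only an illustration: the function name `neuron`, the choice of a sigmoid activation, and the sample numbers are assumptions made for the example, not something the article prescribes.

```python
import math

def sigmoid(z):
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias, activation=sigmoid):
    """Multiply each input by its weight, sum, add the bias, then activate."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

# Illustrative values only (hypothetical inputs, weights, and bias)
output = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.1)
print(output)
```

Here the weighted sum is 0.5 - 0.5 = 0, so the value passed to the activation is just the bias, 0.1.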

Let's go back to school maths:

Equation of the straight line

The basic equation of a straight line is given by

y=mx+c

So why do we have the y-intercept c there instead of just y=mx?

Let’s take the equation y=mx.

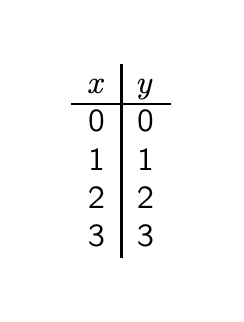

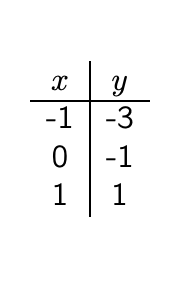

Example:

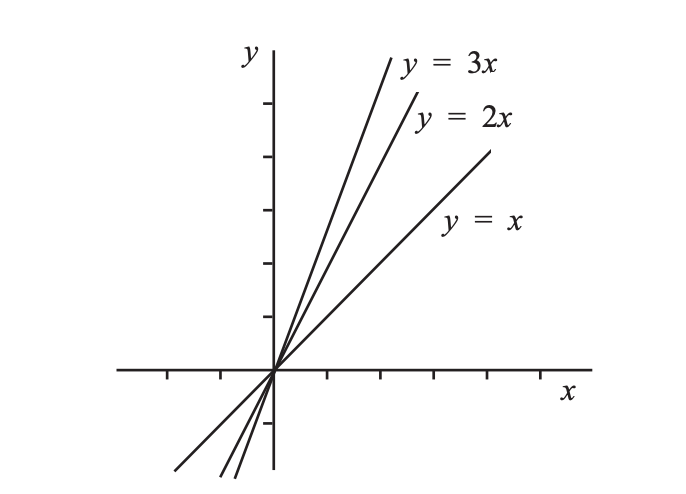

We have a line with the equation y=x. We can calculate its slope from any two points on it.

Using the point (1,1) and the origin (0,0), the slope/gradient is given by

m=(y2-y1)/(x2-x1)

m=(1-0)/(1-0)

m=1

For equation y=2x, m=2

For equation y=3x, m=3
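
These slopes can be checked with a small Python helper. The function name `slope` and the sample points are chosen here purely for illustration.

```python
def slope(p1, p2):
    """Gradient of the straight line through points p1 and p2."""
    (x1, y1), (x2, y2) = p1, p2
    return (y2 - y1) / (x2 - x1)  # undefined for a vertical line (x1 == x2)

origin = (0, 0)
print(slope(origin, (1, 1)))  # a point on y = x
print(slope(origin, (1, 2)))  # a point on y = 2x
print(slope(origin, (1, 3)))  # a point on y = 3x
```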

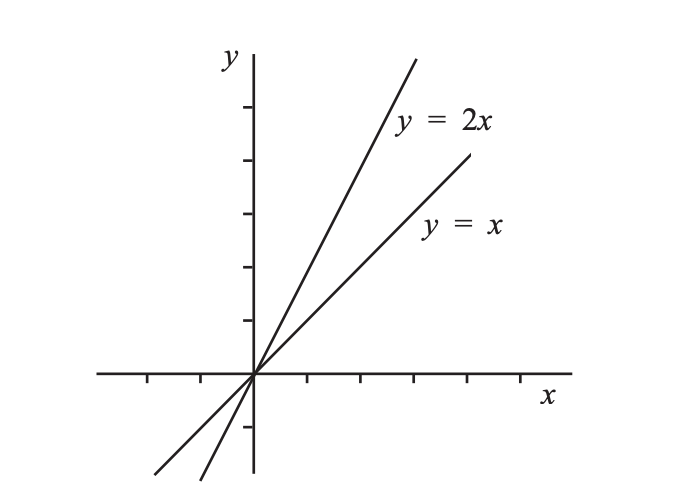

We can see a pattern here. Each of these lines has an equation of the form y equals some number times x. In every case, the line passes through the origin and the slope/gradient is the number that multiplies x.

So if we have the equation y=11x, then m=11.

In general, the equation of a straight line that passes through the origin with gradient m is given by

y=mx

Here, one point is always (0,0). In practice, both points can lie anywhere, at (x1,y1) and (x2,y2), so the line need not pass through the origin.

So what should you do if the line should not pass through the origin?

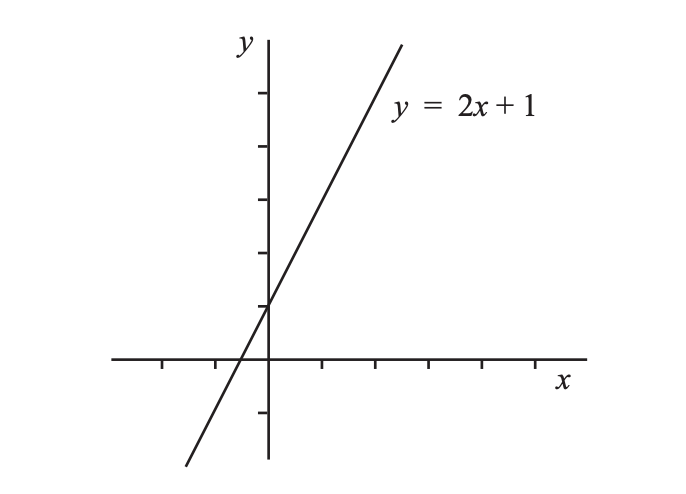

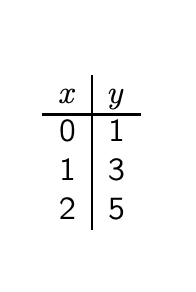

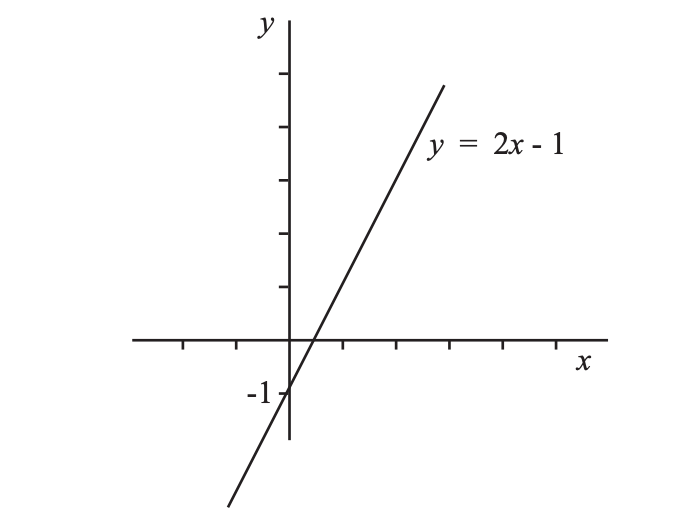

We have to add the y-intercept c to the equation. This shifts the line up or down along the y-axis.

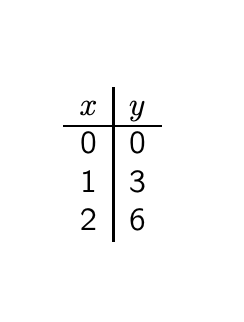

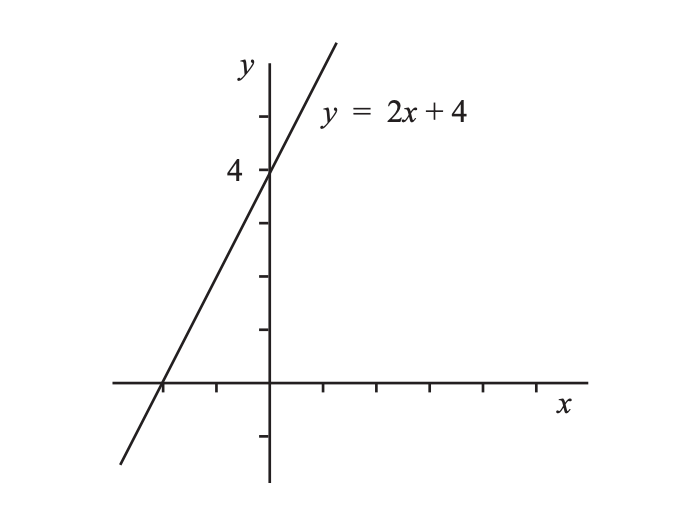

Example:

y=2x+1

y=2x-1

y=2x+4
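
To see what c does in these three examples, here is a short Python sketch. The helper name `line` is hypothetical. It prints the value of each line at x=0 (the y-intercept) and the change in y between x=0 and x=1 (the slope).

```python
def line(m, c):
    """Return the function y = m*x + c."""
    return lambda x: m * x + c

for c in (1, -1, 4):
    f = line(2, c)
    # f(0) is the y-intercept; f(1) - f(0) is the slope
    print(c, f(0), f(1) - f(0))
```

The slope stays at 2 for all three lines; only the point where each line crosses the y-axis changes.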

How can we relate the y-intercept to the bias?

While training a neural network, the errors are calculated and the weights are updated through back-propagation. The errors can be positive or negative, so the network needs to be able to move its output in either direction. The bias plays the role of the y-intercept c: it lets the activation function shift in either direction. With a bias, the model can find the line that best fits the data, with the freedom to pass through any point rather than only the origin.
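
The effect on fitting can be illustrated with a toy example. Note the swap: instead of back-propagation, this sketch uses a closed-form least-squares fit, simply because it is shorter. The data (generated from y = 2x + 4) and the function names are assumptions made for the example.

```python
def fit_without_bias(xs, ys):
    """Least-squares fit of y = m*x (line forced through the origin)."""
    m = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)
    return m, 0.0

def fit_with_bias(xs, ys):
    """Least-squares fit of y = m*x + c."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    m = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
         / sum((x - mean_x) ** 2 for x in xs))
    return m, mean_y - m * mean_x

def squared_error(m, c, xs, ys):
    """Sum of squared differences between the line and the data."""
    return sum((m * x + c - y) ** 2 for x, y in zip(xs, ys))

# Toy data generated from y = 2x + 4 (an assumption for illustration)
xs = [0.0, 1.0, 2.0, 3.0]
ys = [4.0, 6.0, 8.0, 10.0]

for fit in (fit_without_bias, fit_with_bias):
    m, c = fit(xs, ys)
    print(fit.__name__, m, c, squared_error(m, c, xs, ys))
```

Without the intercept, the fitted line has to compensate with a steeper slope and still misses the points. With the intercept, it recovers the slope 2 and the offset 4.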

We can visualize it with the graph.

Here is the graph of the activation function for different weights, without a bias.

As the graph shows, different weight values change the steepness of the curve, but every curve still passes through the same point at x=0. But what if you want the network to output 0 when x=2? You need to shift the entire curve, right?

That is what bias allows you to do.

Look at the graph. When the bias is -5, the curve shifts to the right. In this way, we can train the network to output a value close to 0 when x=2, which lets the model learn the best possible fit to the data.
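
The shift can be checked numerically. This sketch assumes a sigmoid activation and an input weight of 1, and it treats -5 as the bias value b. The article's graph does not state these details, so take them as illustrative choices.

```python
import math

def sigmoid(z):
    """Logistic activation: squashes any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def activation(x, w, b=0.0):
    """Output of a one-input neuron: sigmoid(w*x + b)."""
    return sigmoid(w * x + b)

# Without a bias, every weight gives the same output at x = 0
for w in (0.5, 1.0, 2.0):
    print(f"w={w}, b=0: output at x=0 is {activation(0.0, w):.3f}")

# With a bias of -5 (and an assumed weight of 1), the curve moves right
w = 1.0
for b in (0.0, -5.0):
    print(f"w={w}, b={b}: output at x=2 is {activation(2.0, w, b):.3f}")
```

With b=0 the output at x=2 is well above 0.5. With b=-5 it drops close to 0, because the curve's midpoint has moved from x=0 to x=5.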

This is the basic reason behind adding the bias to the weighted sum.

Thanks!
